An Rx Wrapper for ArcSoft Face Recognition: Build a Face Recognition App in 3 Minutes

RxArcFace: an Rx wrapper around the ArcSoft face recognition SDK for quickly building face recognition apps

Author: ZEKI安卓学弟

Copyright notice: this article is copyrighted by ZEKI安卓学弟 and may not be reproduced in any form without permission.

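Introduction

Vendors provide high-quality face recognition SDKs, but using them involves a lot of boilerplate, such as error handling, detection and attribute analysis, that developers integrating the SDK usually do not care much about. RxArcFace wraps these templated operations of the ArcSoft face recognition SDK and combines them with RxJava2 to give developers a smoother development experience.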

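About the ArcSoft face recognition SDK

ArcFace is ArcSoft's offline face recognition SDK. It provides face detection, gender detection, age detection, face recognition, image quality detection, RGB liveness detection and IR liveness detection. It must be activated online on first use; after that it works locally without a network connection, and you can build application-layer features on top of it to suit your business needs.

The basic edition does not currently support image quality detection or offline activation.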

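0. Preamble

Face recognition is no longer a novelty; it shows up in many business scenarios. As mobile developers, choosing the right SDK makes development more efficient. This article first walks through the basic methods of the ArcSoft face recognition SDK. Because the official SDK is rather verbose to use directly, it then shows how to wrap those methods into a reusable utility that works across scenarios, so that face recognition can be integrated without the tedious steps.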

For SDK setup, please refer to:

https://ai.arcsoft.com.cn/manual/docs#/139

https://ai.arcsoft.com.cn/manual/docs#/140 (only section 3.1 is needed)

Those steps are not repeated here.

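1. Method overview (excerpted from the ArcSoft Android integration guide)

1.activeOnline

Description

Activates the SDK online.

Method

int activeOnline(Context context, String appId, String sdkKey)

The SDK must be activated before first use; once activated, this call does not need to be repeated.

The device must be online when this method is called; after successful activation the SDK can be used offline.

Parameters

| Parameter | Direction | Description |
|---|---|---|
| context | in | Context |
| appId | in | APP_ID obtained from the official website |
| sdkKey | in | SDK_KEY obtained from the official website |

Return value

Returns ErrorInfo.MOK or ErrorInfo.MERR_ASF_ALREADY_ACTIVATED on success; on failure, see the error code list.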

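2.init

Description

Initializes the engine.

This method is crucial. Understanding what each of its parameters means helps avoid problems and also informs the design of your project.

Method

int init(
    Context context,
    DetectMode detectMode,
    DetectFaceOrientPriority detectFaceOrientPriority,
    int detectFaceScaleVal,
    int detectFaceMaxNum,
    int combinedMask
)

Parameters

| Parameter | Direction | Description |
|---|---|---|
| context | in | Context |
| detectMode | in | VIDEO mode: processes consecutive frames. IMAGE mode: processes a single image |
| detectFaceOrientPriority | in | Face detection angle; detecting a single angle is recommended |
| detectFaceScaleVal | in | Minimum face size to detect, expressed as the ratio of the image's long edge to the face box's long edge. VIDEO mode: range [2,32], recommended 16. IMAGE mode: range [2,32], recommended 32 |
| detectFaceMaxNum | in | Maximum number of faces to detect, range [1,50] |
| combinedMask | in | Combination of features to enable; several can be selected |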
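To make detectFaceScaleVal more concrete, here is a minimal Kotlin sketch. It assumes the parameter means exactly what the table says (the ratio of the image's long edge to the face box's long edge), so the smallest detectable face is roughly the long edge divided by the scale value. The function name `minDetectableFaceEdge` is hypothetical and not part of the ArcSoft SDK.

```kotlin
/**
 * Hypothetical helper (not an SDK API): estimates the smallest face box long edge,
 * in pixels, that the engine would still detect for a given detectFaceScaleVal.
 * Assumption: scale value = image long edge / face box long edge.
 */
fun minDetectableFaceEdge(imageWidth: Int, imageHeight: Int, detectFaceScaleVal: Int): Int {
    require(detectFaceScaleVal in 2..32) { "detectFaceScaleVal must be in [2, 32]" }
    val longEdge = maxOf(imageWidth, imageHeight)
    return longEdge / detectFaceScaleVal
}

fun main() {
    // A 1280x720 preview frame with the recommended VIDEO value of 16
    println(minDetectableFaceEdge(1280, 720, 16))
    // The same frame with the recommended IMAGE value of 32 allows smaller faces
    println(minDetectableFaceEdge(1280, 720, 32))
}
```

Under this reading, a larger scale value lets the engine find smaller (more distant) faces, at the cost of more work per frame.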

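3.detectFaces (image data passed as separate arguments)

Method

int detectFaces(
    byte[] data,
    int width,
    int height,
    int format,
    List<FaceInfo> faceInfoList
)

Parameters

| Parameter | Direction | Description |
|---|---|---|
| data | in | Image data |
| width | in | Image width; must be a multiple of 4 |
| height | in | Image height; must be a multiple of 2 for NV21; no restriction for BGR24/GRAY/DEPTH_U16 |
| format | in | Color format of the image |
| faceInfoList | out | Detected face information |

Return value

Returns ErrorInfo.MOK on success; on failure, see the error code list.

The detectFaceMaxNum value passed to init is decisive for whether faces are detected and how many are returned.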
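The size constraints in the table are easy to violate when frames come from arbitrary sources, so a pre-check before calling detectFaces can save debugging time. The sketch below is an assumption-labeled helper, not an SDK function: `checkNv21Frame` is a hypothetical name, and the buffer-size rule relies only on the standard NV21 layout (a full-resolution Y plane followed by an interleaved VU plane at half resolution, i.e. width * height * 3 / 2 bytes).

```kotlin
/**
 * Hypothetical pre-check for an NV21 frame (not part of the ArcSoft SDK).
 * Returns null when the frame satisfies the documented constraints,
 * otherwise a short description of the first violated rule.
 */
fun checkNv21Frame(data: ByteArray, width: Int, height: Int): String? = when {
    width <= 0 || height <= 0 -> "width and height must be positive"
    width % 4 != 0 -> "width must be a multiple of 4"
    height % 2 != 0 -> "height must be a multiple of 2 for NV21"
    data.size < width * height * 3 / 2 -> "buffer too small for an NV21 frame"
    else -> null
}

fun main() {
    val frame = ByteArray(640 * 480 * 3 / 2)
    println(checkNv21Frame(frame, 640, 480) ?: "frame looks valid")
    println(checkNv21Frame(frame, 642, 480) ?: "frame looks valid")
}
```

Returning a message rather than throwing keeps the check cheap to call once per preview frame; the caller can simply skip frames that fail it.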

4.process(传入分离的图像信息数据)

方法

int process(

byte[] data,

int width,

int height,

int format,

List<FaceInfo> faceInfoList,

int combinedMask

)参数说明

| 参数 | 类型 | 描述 |

|---|---|---|

| data | in | 图像数据 |

| width | in | 图片宽度,为4的倍数 |

| height | in | 图片高度,在NV21格式下要求为2的倍数 BGR24格式无限制 |

| format | in | 支持NV21/BGR24 |

| faceInfoList | in | 人脸信息列表 |

| combinedMask | in | 检测的属性(ASF_AGE、ASF_GENDER、 ASF_FACE3DANGLE、ASF_LIVENESS),支持多选 检测的属性须在引擎初始化接口的combinedMask参数中启用 |

重要参数说明

- combinedMask

process接口中支持检测

ASF_AGE、ASF_GENDER、ASF_FACE3DANGLE、ASF_LIVENESS四种属性,但是想检测这些属性,必须在初始化引擎接口中对想要检测的属性进行初始化。

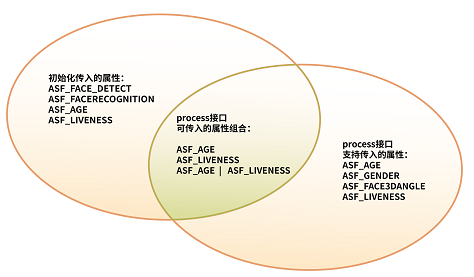

关于初始化接口中combinedMask和process接口中combinedMask参数之间的关系,举例进行详细说明,如下图所示:

process接口中combinedMask支持传入的属性有ASF_AGE、ASF_GENDER、ASF_FACE3DANGLE、ASF_LIVENESS。- 初始化中传入了

ASF_FACE_DETECT、ASF_FACERECOGNITION、ASF_AGE、ASF_LIVENESS属性。 - process可传入属性组合只有

ASF_AGE、ASF_LIVENESS、ASF_AGE | ASF_LIVENESS。

返回值

成功返回ErrorInfo.MOK,失败详见 错误码列表。
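The rule relating the two combinedMask values is a plain bit-subset check, which the following sketch spells out. The bit values below are illustrative stand-ins chosen for this example; real code should use the `FaceEngine.ASF_*` constants from the SDK. The function name `isValidProcessMask` is hypothetical.

```kotlin
// Illustrative bit values only (assumption); use FaceEngine.ASF_* in real code.
val FACE_DETECT = 0x01
val FACE_RECOGNITION = 0x04
val AGE = 0x08
val GENDER = 0x10
val FACE3DANGLE = 0x20
val LIVENESS = 0x80

// Attributes that process() is documented to support
val PROCESS_SUPPORTED = AGE or GENDER or FACE3DANGLE or LIVENESS

/**
 * Hypothetical check: a process mask is valid when it is non-empty, contains only
 * attributes process() supports, and every attribute was also enabled at init time.
 */
fun isValidProcessMask(initMask: Int, processMask: Int): Boolean =
    processMask != 0 &&
        (processMask and PROCESS_SUPPORTED) == processMask &&
        (processMask and initMask) == processMask

fun main() {
    // Same setup as the example above: init enables detect, recognition, age, liveness
    val initMask = FACE_DETECT or FACE_RECOGNITION or AGE or LIVENESS
    println(isValidProcessMask(initMask, AGE or LIVENESS)) // allowed
    println(isValidProcessMask(initMask, GENDER))          // not enabled at init
}
```

Running such a check before calling process makes a mismatched mask fail early with a clear reason instead of an SDK error code.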

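5.extractFaceFeature (image data passed as separate arguments)

Method

int extractFaceFeature(
    byte[] data,
    int width,
    int height,
    int format,
    FaceInfo faceInfo,
    FaceFeature feature
)

Parameters

| Parameter | Direction | Description |
|---|---|---|
| data | in | Image data |
| width | in | Image width; must be a multiple of 4 |
| height | in | Image height; must be a multiple of 2 for NV21; no restriction for BGR24/GRAY/DEPTH_U16 |
| format | in | Color format of the image |
| faceInfo | in | Face information (face box, face angle) |
| feature | out | Extracted face feature |

Return value

Returns ErrorInfo.MOK on success; on failure, see the error code list.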

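6.compareFaceFeature (with a selectable comparison model)

Method

int compareFaceFeature(
    FaceFeature feature1,
    FaceFeature feature2,
    CompareModel compareModel,
    FaceSimilar faceSimilar
)

Parameters

| Parameter | Direction | Description |
|---|---|---|
| feature1 | in | Face feature |
| feature2 | in | Face feature |
| compareModel | in | Comparison model |
| faceSimilar | out | Similarity score of the comparison |

Return value

Returns ErrorInfo.MOK on success; on failure, see the error code list.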
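Recognition therefore reduces to "compare the probe feature against each stored feature and accept the first score above a threshold". The sketch below illustrates only that control flow. It is not ArcSoft's algorithm: `cosineSimilarity` is a stand-in for compareFaceFeature operating on plain float vectors, `findFirstMatch` is a hypothetical name, and the 0.8 threshold is the value the wrapper later in this article uses.

```kotlin
import kotlin.math.sqrt

/** Stand-in similarity (assumption): cosine similarity of two equally sized vectors. */
fun cosineSimilarity(a: FloatArray, b: FloatArray): Float {
    require(a.size == b.size) { "feature vectors must have the same length" }
    var dot = 0f
    var normA = 0f
    var normB = 0f
    for (i in a.indices) {
        dot += a[i] * b[i]
        normA += a[i] * a[i]
        normB += b[i] * b[i]
    }
    if (normA == 0f || normB == 0f) return 0f
    return dot / (sqrt(normA) * sqrt(normB))
}

/** Returns the first candidate whose similarity to the probe exceeds the threshold, or null. */
fun <T> findFirstMatch(
    probe: FloatArray,
    candidates: List<Pair<T, FloatArray>>,
    threshold: Float = 0.8f
): T? = candidates.firstOrNull { (_, feature) ->
    cosineSimilarity(probe, feature) > threshold
}?.first

fun main() {
    val enrolled = listOf(
        "alice" to floatArrayOf(1f, 0f),
        "bob" to floatArrayOf(0f, 1f)
    )
    println(findFirstMatch(floatArrayOf(0.1f, 1f), enrolled)) // close to bob's vector
    println(findFirstMatch(floatArrayOf(1f, 1f), enrolled))   // equally far from both
}
```

Stopping at the first hit keeps the scan short, but it returns the first person above the threshold rather than the best-scoring one; picking the maximum score is a reasonable alternative when the list contains similar faces.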

Using RxArcFace

In build.gradle (the allprojects block belongs in the project-level file, the dependencies block in the app module's file):

allprojects {
    repositories {
        ...
        maven { url 'https://jitpack.io' }
    }
}

dependencies {
    implementation 'com.github.ZYF99:RxArcFace:1.0'

    // RxJava2
    implementation "io.reactivex.rxjava2:rxjava:2.2.13"
    implementation 'io.reactivex.rxjava2:rxandroid:2.1.1'
    implementation "io.reactivex.rxjava2:rxkotlin:2.3.0"
}

Add permissions

<uses-permission android:name="android.permission.INTERNET" />
<uses-permission android:name="android.permission.CAMERA"/>
<uses-feature android:name="android.hardware.camera" />
<uses-feature android:name="android.hardware.camera.autofocus"/>

Make the data class you want to match implement the IFaceDetect interface

data class Person(
    val id: Long? = null,
    val name: String? = null,
    val avatar: String? = null, // avatar URL
    var faceCode: String? = null // mutable face feature (JSON)
) : IFaceDetect {

    override fun getFaceCodeJson(): String? {
        return faceCode
    }

    override fun getAvatarUrl(): String? {
        return avatar
    }

    override fun bindFaceCode(faceCodeJson: String?) {
        faceCode = faceCodeJson
    }

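}

You may ask: why do I have to add the faceCode and avatar properties myself?

You don't necessarily have to. By the time we add face recognition we usually already have our own data classes, which most likely come from the backend, and we often cannot control what the backend sends. faceCode and avatar simply express that the data class must provide two things: a face feature and an avatar. They can be properties you already had or ones you add later. If the backend already returns a property that holds the face feature, just return it from getFaceCodeJson; the same goes for avatar.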
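As an illustration of that last point, the sketch below adapts an existing backend model without adding any new fields. The `Student` class and its property names (`studentNo`, `photoUrl`, `featureJson`) are hypothetical; the `IFaceDetect` interface is repeated here only so the snippet is self-contained, and mirrors the one defined later in this article.

```kotlin
// Repeated from the article so this snippet compiles on its own
interface IFaceDetect {
    fun getFaceCodeJson(): String?
    fun getAvatarUrl(): String?
    fun bindFaceCode(faceCodeJson: String?)
}

// Hypothetical model as a backend might already return it
data class Student(
    val studentNo: String,
    val photoUrl: String?,          // already serves as the avatar
    var featureJson: String? = null // already holds the face feature
) : IFaceDetect {
    // Map the existing properties onto the interface instead of adding new ones
    override fun getFaceCodeJson(): String? = featureJson
    override fun getAvatarUrl(): String? = photoUrl
    override fun bindFaceCode(faceCodeJson: String?) {
        featureJson = faceCodeJson
    }
}

fun main() {
    val student = Student(studentNo = "S001", photoUrl = "https://example.com/s001.jpg")
    student.bindFaceCode("{\"featureData\":[]}")
    println(student.getAvatarUrl())
    println(student.getFaceCodeJson())
}
```

Because the matching functions are generic over `IFaceDetect`, `Student` and `Person` can be used interchangeably even though they share no other type.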

Capturing frames from the camera

private var camera: Camera? = null

// Initialize the camera and the SurfaceView
private fun initCameraOrigin(surfaceView: SurfaceView) {
    surfaceView.holder.addCallback(object : SurfaceHolder.Callback {
        override fun surfaceCreated(holder: SurfaceHolder) {
            // Runs when the surface is created
            if (camera == null) {
                if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {
                    camera = openCamera(this@MainActivity) { data, camera, resWidth, resHeight ->
                        if (data != null && data.size > 1) {
                            // TODO: face matching
                        }
                    }
                }
            }
            // Adjust the camera orientation
            camera?.let { setCameraDisplayOrientation(this@MainActivity, it) }
            // Start the preview
            holder.let { camera?.startPreview(it) }
        }

        override fun surfaceChanged(
            holder: SurfaceHolder,
            format: Int,
            width: Int,
            height: Int
        ) {
        }

        override fun surfaceDestroyed(holder: SurfaceHolder) {
            camera.releaseCamera()
            camera = null
        }
    })
}

override fun onPause() {
    camera?.setPreviewCallback(null)
    camera.releaseCamera() // release camera resources
    camera = null
    super.onPause()
}

override fun onDestroy() {
    camera?.setPreviewCallback(null)
    camera.releaseCamera() // release camera resources
    camera = null
    super.onDestroy()

}

Matching faces

if (data != null && data.size > 1) {
    matchHumanFaceListByArcSoft(
        data = data,
        width = resWidth,
        height = resHeight,
        humanList = listOfPerson,
        doOnMatchedHuman = { matchedPerson ->
            Toast.makeText(
                this@MainActivity,
                "Matched ${matchedPerson.name}",
                Toast.LENGTH_SHORT
            ).show()
            isFaceDetecting = false
        },
        doOnMatchMissing = {
            Toast.makeText(
                this@MainActivity,
                "No match found, enrolling",
                Toast.LENGTH_SHORT
            ).show()
            // Bind face data to a new person
            bindFaceCodeByByteArray(
                Person(name = "Handsome guy"),
                data,
                resWidth,
                resHeight
            ).doOnSuccess {
                // Add the newly enrolled person to the current list
                listOfPerson.add(it)
                Toast.makeText(
                    this@MainActivity,
                    "Enrolled successfully",
                    Toast.LENGTH_SHORT
                ).show()
                isFaceDetecting = false
            }.subscribe()
        },
        doFinally = { }
    )

}

Complete Activity code

package com.lxh.rxarcface

import android.hardware.Camera
import android.os.Build
import androidx.appcompat.app.AppCompatActivity
import android.os.Bundle
import android.util.Log
import android.view.SurfaceHolder
import android.view.SurfaceView
import android.widget.Toast
import com.lxh.rxarcfacelibrary.bindFaceCodeByByteArray
import com.lxh.rxarcfacelibrary.initArcSoftEngine
import com.lxh.rxarcfacelibrary.isFaceDetecting
import com.lxh.rxarcfacelibrary.matchHumanFaceListByArcSoft

class MainActivity : AppCompatActivity() {

    private var camera: Camera? = null

    private var listOfPerson: MutableList<Person> = mutableListOf()

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_main)
        // Initialize the face recognition engine
        initArcSoftEngine(
            this,
            "your APP_ID from the official website",
            "your SDK_KEY from the official website"
        )
        // Initialize the camera
        initCameraOrigin(findViewById(R.id.surface_view))
    }

    // Initialize the camera and the SurfaceView
    private fun initCameraOrigin(surfaceView: SurfaceView) {
        surfaceView.holder.addCallback(object : SurfaceHolder.Callback {
            override fun surfaceCreated(holder: SurfaceHolder) {
                // Runs when the surface is created
                if (camera == null) {
                    if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {
                        camera =
                            openCamera(this@MainActivity) { data, camera, resWidth, resHeight ->
                                if (data != null && data.size > 1) {
                                    matchHumanFaceListByArcSoft(
                                        data = data,
                                        width = resWidth,
                                        height = resHeight,
                                        humanList = listOfPerson,
                                        doOnMatchedHuman = { matchedPerson ->
                                            Toast.makeText(
                                                this@MainActivity,
                                                "Matched ${matchedPerson.name}",
                                                Toast.LENGTH_SHORT
                                            ).show()
                                            isFaceDetecting = false
                                        },
                                        doOnMatchMissing = {
                                            Toast.makeText(
                                                this@MainActivity,
                                                "No match found, enrolling",
                                                Toast.LENGTH_SHORT
                                            ).show()
                                            // Bind face data to a new person
                                            bindFaceCodeByByteArray(
                                                Person(name = "Handsome guy"),
                                                data,
                                                resWidth,
                                                resHeight
                                            ).doOnSuccess {
                                                // Add the newly enrolled person to the current list
                                                listOfPerson.add(it)
                                                Toast.makeText(
                                                    this@MainActivity,
                                                    "Enrolled successfully",
                                                    Toast.LENGTH_SHORT
                                                ).show()
                                                isFaceDetecting = false
                                            }.subscribe()
                                        },
                                        doFinally = { }
                                    )
                                }
                            }
                    }
                }
                // Adjust the camera orientation
                camera?.let { setCameraDisplayOrientation(this@MainActivity, it) }
                // Start the preview
                holder.let { camera?.startPreview(it) }
            }

            override fun surfaceChanged(
                holder: SurfaceHolder,
                format: Int,
                width: Int,
                height: Int
            ) {
            }

            override fun surfaceDestroyed(holder: SurfaceHolder) {
                camera.releaseCamera()
                camera = null
            }
        })
    }

    override fun onPause() {
        camera?.setPreviewCallback(null)
        camera.releaseCamera() // release camera resources
        camera = null
        super.onPause()
    }

    override fun onDestroy() {
        camera?.setPreviewCallback(null)
        camera.releaseCamera() // release camera resources
        camera = null
        super.onDestroy()
    }

}

Note: the demo does not check the camera permission. Grant it manually in the system settings, or add your own runtime permission check.

How the wrapper works

For using the SDK directly, refer to the official demo, which you can download when registering for the SDK service. This article does not cover the demo. The wrapper built below is much simpler to use than the raw SDK.

1. Add dependencies

Make sure the ArcSoft dependency is configured as described in section 3.1 of https://ai.arcsoft.com.cn/manual/docs#/140

// JSON serializer (the utility uses Moshi for serialization; feel free to substitute another library)
implementation("com.squareup.moshi:moshi-kotlin:1.9.2")
kapt("com.squareup.moshi:moshi-kotlin-codegen:1.9.2")

// Glide (the utility uses Glide to load images; feel free to substitute another library)
implementation "com.github.bumptech.glide:glide:4.10.0"

// RxJava2
implementation "io.reactivex.rxjava2:rxjava:2.2.13"
implementation 'io.reactivex.rxjava2:rxandroid:2.1.1'
implementation "io.reactivex.rxjava2:rxkotlin:2.3.0"

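2. Implementing the utility

Global variables

// (ArcSoft) Similarity threshold: a score above this value is treated as the same person
const val ARC_SOFT_VALUE_MATCHED = 0.8f

private var context: Context? = null

// ArcSoft image analysis engine (used app-wide to turn face pictures into ArcSoft face feature data)
// Two engines are used because face photos fetched from the network or from our own server are always upright,
// whereas frames from the Android Camera arrive at an unknown rotation, and init requires a detection angle
private val faceDetectEngine = FaceEngine()

// ArcSoft face recognition engine (used for recognizing faces)
private val faceEngine = FaceEngine()

// Timestamp of the last face detection
var lastFaceDetectingTime = 0L

// Whether a detection is in progress (important: handing several images to the SDK at the same time exhausts memory in the native C++ layer)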

var isFaceDetecting = false

Initialization

/**
 * (ArcSoft) Initializes the face recognition engines
 */
fun initArcSoftEngine(
    contextTemp: Context,
    arcAppId: String, // APP_ID obtained from the official website
    arcSdkKey: String // SDK_KEY obtained from the official website
) {
    context = contextTemp
    val activeCode = FaceEngine.activeOnline(
        context,
        arcAppId,
        arcSdkKey
    )
    Log.d("ArcSoft activation", "result code: $activeCode")

    // Face recognition engine
    val faceEngineCode = faceEngine.init(
        context,
        DetectMode.ASF_DETECT_MODE_IMAGE, // detection mode: ASF_DETECT_MODE_VIDEO or ASF_DETECT_MODE_IMAGE
        DetectFaceOrientPriority.ASF_OP_270_ONLY, // detection angle; if unsure, switch to VIDEO mode and use ASF_OP_ALL_OUT (all angles)
        16,
        6,
        FaceEngine.ASF_FACE_RECOGNITION or FaceEngine.ASF_AGE or FaceEngine.ASF_FACE_DETECT or FaceEngine.ASF_GENDER or FaceEngine.ASF_FACE3DANGLE
    )

    // Image analysis engine
    faceDetectEngine.init(
        context,
        DetectMode.ASF_DETECT_MODE_VIDEO,
        DetectFaceOrientPriority.ASF_OP_ALL_OUT,
        16,
        6,
        FaceEngine.ASF_FACE_RECOGNITION or FaceEngine.ASF_AGE or FaceEngine.ASF_FACE_DETECT or FaceEngine.ASF_GENDER or FaceEngine.ASF_FACE3DANGLE
    )

    Log.d("FaceEngine init", "initEngine: init $faceEngineCode")

    // Activation may report "already activated", which also counts as success
    if (activeCode != ErrorInfo.MOK && activeCode != ErrorInfo.MERR_ASF_ALREADY_ACTIVATED) {
        Toast.makeText(contextTemp, "ArcSoft activation failed, code $activeCode", Toast.LENGTH_LONG).show()
    }
    // Engine initialization succeeded only if it returned MOK
    if (faceEngineCode != ErrorInfo.MOK) {
        Toast.makeText(contextTemp, "ArcSoft face engine initialization failed, code $faceEngineCode", Toast.LENGTH_LONG).show()
    }

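}

Next we need to define a contract. From the API overview above we know that recognition boils down to comparing two FaceFeature objects with compareFaceFeature(), so any data class we want to match, say a data class Person, has to carry a FaceFeature (the interface below exposes it as a JSON string). We may have several such classes, for example Student and Teacher, that are otherwise unrelated, so I use an interface that every class taking part in face recognition must implement.

Defining the interface for recognizable entities

/**
 * Interface that every data class taking part in face recognition must implement
 */
interface IFaceDetect {

    // Returns the face feature code as JSON
    fun getFaceCodeJson(): String?

    // Returns the avatar URL
    fun getAvatarUrl(): String?

    // Binds a face feature code
    fun bindFaceCode(faceCodeJson: String?)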

}

Getting a FaceFeature from an image byte array

/**
 * (ArcSoft) Binds a feature code to a person from the byte array of that person's face image
 */
@Synchronized
fun <T : IFaceDetect> bindFaceCodeByByteArray(
    person: T,
    imageByteArray: ByteArray,
    imageWidth: Int,
    imageHeight: Int
): Single<T> {
    return getArcFaceCodeByImageData(
        imageByteArray,
        imageWidth,
        imageHeight
    ).flatMap {
        Single.just(person.apply {
            bindFaceCode(it)
        })
    }.subscribeOn(Schedulers.io())
        .observeOn(AndroidSchedulers.mainThread())
}

/**
 * Converts raw NV21 image data into an ArcFace feature code (JSON); emits "" on any failure
 */
private fun getArcFaceCodeByImageData(
    imageData: ByteArray,
    imageWidth: Int,
    imageHeight: Int
): Single<String> {
    return Single.create { emitter ->
        val detectStartTime = System.currentTimeMillis()
        // Detected faces
        val faceInfoList: List<FaceInfo> = mutableListOf()
        // Face detection
        val detectCode = faceDetectEngine.detectFaces(
            imageData,
            imageWidth,
            imageHeight,
            FaceEngine.CP_PAF_NV21,
            faceInfoList
        )
        if (detectCode == ErrorInfo.MOK) {
            // Attribute analysis
            val faceProcessCode = faceDetectEngine.process(
                imageData,
                imageWidth,
                imageHeight,
                FaceEngine.CP_PAF_NV21,
                faceInfoList,
                FaceEngine.ASF_AGE or FaceEngine.ASF_GENDER or FaceEngine.ASF_FACE3DANGLE
            )
            // Analysis succeeded
            if (faceProcessCode == ErrorInfo.MOK && faceInfoList.isNotEmpty()) {
                // Feature of the detected face
                val currentFaceFeature = FaceFeature()
                // Feature extraction
                val res = faceDetectEngine.extractFaceFeature(
                    imageData,
                    imageWidth,
                    imageHeight,
                    FaceEngine.CP_PAF_NV21,
                    faceInfoList[0],
                    currentFaceFeature
                )
                if (res == ErrorInfo.MOK) {
                    // Feature extraction succeeded
                    Log.d(
                        "Face conversion time (ms)",
                        "${System.currentTimeMillis() - detectStartTime}"
                    )
                    Schedulers.io().scheduleDirect {
                        emitter.onSuccess(globalMoshi.toJson(currentFaceFeature))
                    }
                } else {
                    // Emit on failure as well; otherwise the Single would never terminate
                    Log.d("ARCFACE", "face feature extraction finished, code is $res")
                    Schedulers.io().scheduleDirect {
                        emitter.onSuccess("")
                    }
                }
            } else {
                Log.d("ARCFACE", "face process finished, code is $faceProcessCode")
                Schedulers.io().scheduleDirect {
                    emitter.onSuccess("")
                }
            }
        } else {
            Log.d(
                "ARCFACE",
                "face detection finished, code is " + detectCode + ", face num is " + faceInfoList.size
            )
            Schedulers.io().scheduleDirect {
                emitter.onSuccess("")
            }
        }
    }
}

Getting a FaceFeature from an image URL

/**
 * (ArcSoft) Takes a list of people, loads each person's face image from its URL,
 * and returns the list with feature codes bound
 */
@Synchronized
fun <T : IFaceDetect> detectPersonAvatarAndBindFaceFeatureCodeByArcSoft(
    personListTemp: List<T>?
): Single<List<T>> {
    // orEmpty() guards against a null list, which RxJava2's fromIterable rejects
    return Observable.fromIterable(personListTemp.orEmpty())
        .flatMapSingle { person ->
            getArcFaceCodeByPicUrl(person.getAvatarUrl())
                .map { arcFaceCodeJson ->
                    person.bindFaceCode(arcFaceCodeJson)
                    person
                }
        }
        .toList()
        .subscribeOn(Schedulers.io())
}

/**
 * Loads a photo from a URL and converts it into an ArcFace feature code (JSON); emits "" on any failure
 */
private fun getArcFaceCodeByPicUrl(
    picUrl: String?
): Single<String> {
    return Single.create { emitter ->
        Glide.with(context!!)
            .asBitmap()
            .load(picUrl)
            .listener(object : RequestListener<Bitmap> {
                override fun onLoadFailed(
                    e: GlideException?,
                    model: Any?,
                    target: Target<Bitmap>?,
                    isFirstResource: Boolean
                ): Boolean {
                    emitter.onSuccess("")
                    return false
                }

                override fun onResourceReady(
                    resource: Bitmap?,
                    model: Any?,
                    target: Target<Bitmap>?,
                    dataSource: DataSource?,
                    isFirstResource: Boolean
                ): Boolean {
                    return false
                }
            })
            .into(object : SimpleTarget<Bitmap>() {
                @Synchronized
                override fun onResourceReady(
                    bitMap: Bitmap,
                    transition: Transition<in Bitmap>?
                ) {
                    val detectStartTime = System.currentTimeMillis()
                    // Detected faces
                    val faceInfoList: List<FaceInfo> = mutableListOf()
                    val faceByteArray = getPixelsBGR(bitMap)
                    // Face detection
                    val detectCode = faceDetectEngine.detectFaces(
                        faceByteArray,
                        bitMap.width,
                        bitMap.height,
                        FaceEngine.CP_PAF_BGR24,
                        faceInfoList
                    )
                    if (detectCode == ErrorInfo.MOK) {
                        // Attribute analysis
                        val faceProcessCode = faceDetectEngine.process(
                            faceByteArray,
                            bitMap.width,
                            bitMap.height,
                            FaceEngine.CP_PAF_BGR24,
                            faceInfoList,
                            FaceEngine.ASF_AGE or FaceEngine.ASF_GENDER or FaceEngine.ASF_FACE3DANGLE
                        )
                        // Analysis succeeded
                        if (faceProcessCode == ErrorInfo.MOK && faceInfoList.isNotEmpty()) {
                            // Feature of the detected face
                            val currentFaceFeature = FaceFeature()
                            // Feature extraction
                            val res = faceDetectEngine.extractFaceFeature(
                                faceByteArray,
                                bitMap.width,
                                bitMap.height,
                                FaceEngine.CP_PAF_BGR24,
                                faceInfoList[0],
                                currentFaceFeature
                            )
                            if (res == ErrorInfo.MOK) {
                                // Feature extraction succeeded
                                Log.d(
                                    "Face conversion time (ms)",
                                    "${System.currentTimeMillis() - detectStartTime}"
                                )
                                Schedulers.io().scheduleDirect {
                                    emitter.onSuccess(globalMoshi.toJson(currentFaceFeature))
                                }
                            } else {
                                // Emit on failure as well; otherwise the Single would never terminate
                                Log.d("ARCFACE", "face feature extraction finished, code is $res")
                                Schedulers.io().scheduleDirect {
                                    emitter.onSuccess("")
                                }
                            }
                        } else {
                            Log.d("ARCFACE", "face process finished, code is $faceProcessCode")
                            Schedulers.io().scheduleDirect {
                                emitter.onSuccess("")
                            }
                        }
                    } else {
                        Log.d(
                            "ARCFACE",
                            "face detection finished, code is " + detectCode + ", face num is " + faceInfoList.size
                        )
                        Schedulers.io().scheduleDirect {
                            emitter.onSuccess("")
                        }
                    }
                }
            })
    }

}

Binding face feature data to entities

This is done by the detectPersonAvatarAndBindFaceFeatureCodeByArcSoft function already shown above, which returns the given list of people with a feature code bound to each of them.

Matching one person from a list

With the contract in place, we can start recognizing. First, a method that picks one person out of a list:

/**
 * (ArcSoft) Matches a face image against a list of people
 */
// All matching code must carry the @Synchronized annotation, for the same reason as isFaceDetecting: only one image may be processed at a time
@Synchronized
fun <T : IFaceDetect> matchHumanFaceListByArcSoft(
    data: ByteArray,
    width: Int,
    height: Int,
    previewWidth: Int? = null, // pass previewWidth and previewHeight only if the face must be in the center of your preview View; otherwise omit them or pass null
    previewHeight: Int? = null,
    humanList: List<T>,
    doOnMatchedHuman: (T) -> Unit,
    doOnMatchMissing: (() -> Unit)? = null,
    doFinally: (() -> Unit)? = null
) {
    // Return immediately if a detection is running or the last one started less than a second ago
    // (adjust the 1-second interval as needed)
    if (isFaceDetecting
        || System.currentTimeMillis() - lastFaceDetectingTime <= 1000
    ) return

    // Mark detection as in progress
    isFaceDetecting = true
    // Record when this detection started
    lastFaceDetectingTime = System.currentTimeMillis()

    // Detected faces
    val faceInfoList: List<FaceInfo> = mutableListOf()
    // Face detection
    val detectCode = faceEngine.detectFaces(
        data,
        width,
        height,
        FaceEngine.CP_PAF_NV21,
        faceInfoList
    )
    // Detection failed or found no face: stop here
    if (detectCode != ErrorInfo.MOK || faceInfoList.isEmpty()) {
        Log.d(
            "ARCFACE",
            "face detection finished, code is " + detectCode + ", face num is " + faceInfoList.size
        )
        doFinally?.invoke()
        isFaceDetecting = false
        return
    }

    // Attribute analysis
    val faceProcessCode = faceEngine.process(
        data,
        width,
        height,
        FaceEngine.CP_PAF_NV21,
        faceInfoList,
        FaceEngine.ASF_AGE or FaceEngine.ASF_GENDER or FaceEngine.ASF_FACE3DANGLE
    )
    // Analysis failed
    if (faceProcessCode != ErrorInfo.MOK) {
        Log.d("ARCFACE", "face process finished, code is $faceProcessCode")
        doFinally?.invoke()
        isFaceDetecting = false
        return
    }

    // Non-null previewWidth and previewHeight mean the face must be in the center of the preview
    val centeredAsRequired =
        previewWidth != null
                && previewHeight != null
                && isAvatarInViewCenter(faceInfoList[0].rect, previewWidth, previewHeight)
    // Null previewWidth and previewHeight mean centering is not required
    val centeringNotRequired = previewWidth == null && previewHeight == null
    if (!centeredAsRequired && !centeringNotRequired) {
        // No usable face (not centered as required): stop matching
        doFinally?.invoke()
        isFaceDetecting = false
        return
    }

    // Feature of the detected face
    val currentFaceFeature = FaceFeature()
    // Feature extraction
    val res = faceEngine.extractFaceFeature(
        data,
        width,
        height,
        FaceEngine.CP_PAF_NV21,
        faceInfoList[0],
        currentFaceFeature
    )
    // Feature extraction failed
    if (res != ErrorInfo.MOK) {
        doFinally?.invoke()
        isFaceDetecting = false
        return
    }

    // Compare against each person in the list
    val matchedMeetingPerson = humanList.find {
        val faceSimilar = FaceSimilar()
        val startDetectTime = System.currentTimeMillis()
        if (it.getFaceCodeJson().isNullOrEmpty()) {
            return@find false
        }
        val compareResult =
            faceEngine.compareFaceFeature(
                globalMoshi.fromJson(it.getFaceCodeJson()),
                currentFaceFeature,
                faceSimilar
            )
        Log.d("Per-person match time", "${System.currentTimeMillis() - startDetectTime}")
        if (compareResult == ErrorInfo.MOK) {
            Log.d("Similarity", faceSimilar.score.toString())
            faceSimilar.score > ARC_SOFT_VALUE_MATCHED
        } else {
            Log.d("Comparison error", compareResult.toString())
            false
        }
    }

    if (matchedMeetingPerson == null) {
        // Nobody matched
        doOnMatchMissing?.invoke()
    } else {
        // Someone matched
        doOnMatchedHuman(matchedMeetingPerson)
    }

}

Matching a single person

/**
 * (ArcSoft) Checks whether a face image belongs to one specific person
 */
@Synchronized
fun <T : IFaceDetect> matchHumanFaceSoloByArcSoft(
    data: ByteArray,
    width: Int,
    height: Int,
    previewWidth: Int? = null,
    previewHeight: Int? = null,
    human: T,
    doOnMatched: (T) -> Unit,
    doOnMatchMissing: (() -> Unit)? = null,
    doFinally: (() -> Unit)? = null
) {
    matchHumanFaceListByArcSoft(
        data = data,
        width = width,
        height = height,
        previewWidth = previewWidth,
        previewHeight = previewHeight,
        humanList = listOf(human),
        doOnMatchedHuman = doOnMatched,
        doOnMatchMissing = doOnMatchMissing,
        doFinally = doFinally
    )

}

Checking whether the face is in the center of the preview View

/**
 * Returns true if the face rectangle lies in the center of the View
 */
fun isAvatarInViewCenter(rect: Rect, previewWidth: Int, previewHeight: Int): Boolean {
    try {
        // Minimum margin at the top and bottom
        val minSX = previewHeight / 10f
        // Minimum margin at the left and right
        val minZY = kotlin.math.abs(previewWidth - previewHeight) / 2 + minSX
        val isLeft = kotlin.math.abs(rect.left) > minZY
        val isTop = kotlin.math.abs(rect.top) > minSX
        val isRight = kotlin.math.abs(rect.left) + rect.width() < (previewWidth - minZY)
        val isBottom = kotlin.math.abs(rect.top) + rect.height() < (previewHeight - minSX)
        if (isLeft && isTop && isRight && isBottom) return true
    } catch (e: Exception) {
        // localizedMessage may be null, so fall back to a fixed message
        Log.e("ARCFACE", e.localizedMessage ?: "isAvatarInViewCenter failed")
    }
    return false

}

Destroying the engines

/**
 * Destroys the face recognition engine
 */
fun unInitArcFaceEngine() {
    faceEngine.unInit()
}

/**
 * Destroys the image analysis engine
 */
fun unInitArcFaceDetectEngine() {
    faceDetectEngine.unInit()

}

Utility for extracting BGR pixels

/**
 * Extracts the BGR pixels of an image
 * (relies on a 4-bytes-per-pixel bitmap whose bytes are ordered R, G, B, A, such as ARGB_8888)
 * @param image the source bitmap
 * @return the pixels as a BGR24 byte array
 */
fun getPixelsBGR(image: Bitmap): ByteArray? {
    // calculate how many bytes our image consists of
    val bytes = image.byteCount
    val buffer = ByteBuffer.allocate(bytes) // Create a new buffer
    image.copyPixelsToBuffer(buffer) // Move the byte data to the buffer
    val temp = buffer.array() // Get the underlying array containing the data.
    val pixels = ByteArray(temp.size / 4 * 3) // Allocate for BGR
    // Copy pixels into place
    for (i in 0 until temp.size / 4) {
        pixels[i * 3] = temp[i * 4 + 2] //B
        pixels[i * 3 + 1] = temp[i * 4 + 1] //G
        pixels[i * 3 + 2] = temp[i * 4] //R
    }
    return pixels

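}

The code above relies on a serialization helper, so here it is as well for easy copy and paste. Note that globalMoshi, which is used throughout, is a shared Moshi instance whose definition is not shown in this article.

Serialization extensions (Moshi extension methods, ModelUtil)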

import com.squareup.moshi.JsonAdapter

import com.squareup.moshi.Moshi

import com.squareup.moshi.Types

import java.lang.reflect.Type

inline fun <reified T> String?.fromJson(moshi: Moshi = globalMoshi): T? =

this?.let { ModelUtil.fromJson(this, T::class.java, moshi = moshi) }

inline fun <reified T> T?.toJson(moshi: Moshi = globalMoshi): String =

ModelUtil.toJson(this, T::class.java, moshi = moshi)

inline fun <reified T> Moshi.fromJson(json: String?): T? =

json?.let { ModelUtil.fromJson(json, T::class.java, moshi = this) }

inline fun <reified T> Moshi.toJson(t: T?): String =

ModelUtil.toJson(t, T::class.java, moshi = this)

inline fun <reified T> List<T>.listToJson(): String =

ModelUtil.listToJson(this, T::class.java)

inline fun <reified T> String.jsonToList(): List<T>? =

ModelUtil.jsonToList(this, T::class.java)

object ModelUtil {

inline fun <reified S, reified T> copyModel(source: S): T? {

return fromJson(

toJson(

any = source,

classOfT = S::class.java

), T::class.java

)

}

fun <T> toJson(any: T?, classOfT: Class<T>, moshi: Moshi = globalMoshi): String {

return moshi.adapter(classOfT).toJson(any)

}

fun <T> fromJson(json: String, classOfT: Class<T>, moshi: Moshi = globalMoshi): T? {

return moshi.adapter(classOfT).lenient().fromJson(json)

}

fun <T> fromJson(json: String, typeOfT: Type, moshi: Moshi = globalMoshi): T? {

return moshi.adapter<T>(typeOfT).fromJson(json)

}

fun <T> listToJson(list: List<T>?, classOfT: Class<T>, moshi: Moshi = globalMoshi): String {

val type = Types.newParameterizedType(List::class.java, classOfT)

val adapter: JsonAdapter<List<T>> = moshi.adapter(type)

return adapter.toJson(list)

}

fun <T> jsonToList(json: String, classOfT: Class<T>, moshi: Moshi = globalMoshi): List<T>? {

val type = Types.newParameterizedType(List::class.java, classOfT)

val adapter = moshi.adapter<List<T>>(type)

return adapter.fromJson(json)

}

}

Camera extension utilities

import android.app.Activity
import android.content.Context
import android.content.res.Configuration
import android.graphics.ImageFormat
import android.hardware.Camera
import android.os.Build
import android.util.Log
import android.view.Surface
import android.view.SurfaceHolder
import androidx.annotation.RequiresApi
import kotlin.math.abs

private var resultWidth = 0
private var resultHeight = 0

var cameraId: Int = 0

/**
 * Opens the front-facing camera
 */
@RequiresApi(Build.VERSION_CODES.LOLLIPOP)
fun openCamera(
    context: Context,
    width: Int = 800,
    height: Int = 600,
    doOnPreviewCallback: (ByteArray?, Camera?, Int, Int) -> Unit
): Camera {
    cameraId = findFrontFacingCameraID()
    // Fail with a clear message instead of passing -1 to Camera.open
    check(cameraId >= 0) { "No front-facing camera found" }
    val c = Camera.open(cameraId)
    initParameters(context, c, width, height)
    c.setPreviewCallback { data, camera ->
        doOnPreviewCallback(
            data,
            camera,
            resultWidth,
            resultHeight
        )
    }
    return c
}

private fun findFrontFacingCameraID(): Int {
    var cameraId = -1
    // Search for the front-facing camera
    val numberOfCameras = Camera.getNumberOfCameras()
    for (i in 0 until numberOfCameras) {
        val info = Camera.CameraInfo()
        Camera.getCameraInfo(i, info)
        if (info.facing == Camera.CameraInfo.CAMERA_FACING_FRONT) {
            Log.d("CAMERA UTIL", "Camera found, ID is $i")
            cameraId = i
            break
        }
    }
    return cameraId
}

/**
 * Sets the camera parameters
 */
fun initParameters(
    context: Context,
    camera: Camera,
    width: Int,
    height: Int
) {
    // Get the Parameters object
    val parameters = camera.parameters
    val size = getOptimalSize(context, parameters.supportedPreviewSizes, width, height)
    parameters?.setPictureSize(size?.width ?: 0, size?.height ?: 0)
    parameters?.setPreviewSize(size?.width ?: 0, size?.height ?: 0)
    resultWidth = size?.width ?: 0
    resultHeight = size?.height ?: 0
    // Set the preview format
    parameters?.previewFormat = ImageFormat.NV21
    // Focus mode
    parameters?.focusMode = Camera.Parameters.FOCUS_MODE_FIXED
    // Apply the parameters to the camera
    camera.parameters = parameters
}

/**
 * Releases the camera resources
 */
fun Camera?.releaseCamera() {
    if (this != null) {
        // Stop the preview
        stopPreview()
        setPreviewCallback(null)
        // Release the camera
        release()
    }
}

/**
 * Returns the current display rotation in degrees
 */
fun getDisplayRotation(activity: Activity): Int {
    val rotation = activity.windowManager.defaultDisplay
        .rotation
    when (rotation) {
        Surface.ROTATION_0 -> return 0
        Surface.ROTATION_90 -> return 90
        Surface.ROTATION_180 -> return 180
        Surface.ROTATION_270 -> return 270
    }
    return 90
}

/**
 * Sets the orientation in which the preview is displayed
 */
fun setCameraDisplayOrientation(
    activity: Activity,
    camera: Camera
) {
    // See android.hardware.Camera.setCameraDisplayOrientation for
    // documentation.
    val info = Camera.CameraInfo()
    Camera.getCameraInfo(cameraId, info)
    val degrees = getDisplayRotation(activity)
    var result: Int
    if (info.facing == Camera.CameraInfo.CAMERA_FACING_FRONT) {
        result = (info.orientation + degrees) % 360
        result = (360 - result) % 360 // compensate the mirror
    } else { // back-facing
        result = (info.orientation - degrees + 360) % 360
    }
    camera.setDisplayOrientation(result)
}
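The orientation arithmetic above is easier to reason about when separated from the Android classes. The following sketch extracts just the formula into a pure function so it can be tried with concrete numbers; `displayOrientation` is a hypothetical name introduced here, and the sample sensor orientations (270 for a front camera, 90 for a back camera) are typical values assumed for illustration, not guaranteed for every device.

```kotlin
/**
 * Pure version of the formula used in setCameraDisplayOrientation.
 * cameraOrientation: the sensor orientation reported by Camera.CameraInfo (0, 90, 180, 270)
 * displayRotationDegrees: the display rotation in degrees (0, 90, 180, 270)
 */
fun displayOrientation(
    cameraOrientation: Int,
    displayRotationDegrees: Int,
    frontFacing: Boolean
): Int =
    if (frontFacing) {
        // The front camera preview is mirrored, so the angle is inverted
        (360 - (cameraOrientation + displayRotationDegrees) % 360) % 360
    } else {
        (cameraOrientation - displayRotationDegrees + 360) % 360
    }

fun main() {
    // Portrait device, front camera with an assumed sensor orientation of 270
    println(displayOrientation(270, 0, frontFacing = true))
    // Portrait device, back camera with an assumed sensor orientation of 90
    println(displayOrientation(90, 0, frontFacing = false))
}
```

Note that this only rotates what is drawn on screen; the bytes delivered to the preview callback keep the sensor orientation, which is why the engine's detection angle still has to be configured separately.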

/**
 * Starts the camera preview
 */
fun Camera.startPreview(surfaceHolder: SurfaceHolder) {
    // Show the live preview on the given SurfaceHolder
    setPreviewDisplay(surfaceHolder)
    startPreview()
}

/**
 * Picks the supported size whose aspect ratio is closest to width/height
 * @param context
 * @param sizes the sizes supported by the device
 * @param w width of the camera preview view
 * @param h height of the camera preview view
 * @return the best matching size, or null if sizes is empty
 */
private fun getOptimalSize(
    context: Context,
    sizes: List<Camera.Size>,
    w: Int,
    h: Int
): Camera.Size? {
    val ASPECT_TOLERANCE = 0.1 // tolerance used when picking the best ratio
    var targetRatio = -1.0
    val orientation = context.resources.configuration.orientation
    // Keep targetRatio greater than 1, because size.width / size.height is always greater than 1
    if (orientation == Configuration.ORIENTATION_PORTRAIT) {
        targetRatio = h.toDouble() / w
    } else if (orientation == Configuration.ORIENTATION_LANDSCAPE) {
        targetRatio = w.toDouble() / h
    }
    var optimalSize: Camera.Size? = null
    var minDiff = Double.MAX_VALUE
    val targetHeight = w.coerceAtMost(h)
    for (size in sizes) {
        val ratio = size.width.toDouble() / size.height
        // Skip sizes whose ratio differs from the target by more than the tolerance
        if (abs(ratio - targetRatio) > ASPECT_TOLERANCE) {
            continue
        }
        if (abs(size.height - targetHeight) < minDiff) {
            optimalSize = size
            minDiff = abs(size.height - targetHeight).toDouble()
        }
    }
    // If no size matched by ratio, fall back to the smallest height difference so that a value is always returned
    if (optimalSize == null) {
        minDiff = Double.MAX_VALUE
        for (size in sizes) {
            if (abs(size.height - targetHeight) < minDiff) {
                optimalSize = size
                minDiff = abs(size.height - targetHeight).toDouble()
            }
        }
    }
    return optimalSize

}

Unless otherwise stated, all articles on this blog are licensed under CC BY-SA 4.0. Please credit the source when reposting.